Vitalik Buterin praises TiTok AI image compression method for onchain use

The TiTok compression method was developed by researchers from ByteDance and the Technical University of Munich. According to TiTok's research paper, the AI image compression technology can compress a 256x256 pixel image into 32 discrete tokens.

Ethereum co-founder Vitalik Buterin has endorsed the new Transformer-based 1-Dimensional Tokenizer (TiTok) image compression method for its potential blockchain applications.

Not to be confused with the social media platform TikTok, the new TiTok compression method significantly reduces image size, making it more practical for storage on the blockchain.

Buterin highlighted TiTok’s blockchain potential on the decentralized social media platform Farcaster, stating “320 bits is basically a hash. Small enough to go on chain for every user.”
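Buterin's 320-bit figure follows from simple arithmetic if each of the 32 tokens is assumed to take 10 bits, that is, an index into a codebook of 1,024 entries. That codebook size is an assumption inferred from his number, not a value stated in this article. A minimal Python sketch of the arithmetic, using hypothetical helper names and made-up token values, packs 32 token indices into 40 bytes and compares that with the raw image size:

```python
# Assumption: 10 bits per token (a 1,024-entry codebook), inferred from
# the 320-bit figure quoted above; the paper's models may use other sizes.
NUM_TOKENS = 32
BITS_PER_TOKEN = 10

def pack_tokens(tokens, bits_per_token=BITS_PER_TOKEN):
    """Pack token indices into a big-endian byte string."""
    value = 0
    for t in tokens:
        if not 0 <= t < (1 << bits_per_token):
            raise ValueError(f"token {t} does not fit in {bits_per_token} bits")
        value = (value << bits_per_token) | t
    total_bits = len(tokens) * bits_per_token
    return value.to_bytes((total_bits + 7) // 8, "big")

def unpack_tokens(data, num_tokens=NUM_TOKENS, bits_per_token=BITS_PER_TOKEN):
    """Recover the token indices from a packed byte string."""
    value = int.from_bytes(data, "big")
    mask = (1 << bits_per_token) - 1
    tokens = []
    for _ in range(num_tokens):
        tokens.append(value & mask)
        value >>= bits_per_token
    return tokens[::-1]

# Hypothetical token indices, for illustration only.
example_tokens = [(i * 37) % 1024 for i in range(NUM_TOKENS)]
packed = pack_tokens(example_tokens)

token_bits = NUM_TOKENS * BITS_PER_TOKEN   # 32 tokens x 10 bits
raw_bits = 256 * 256 * 3 * 8               # uncompressed 8-bit RGB image
print(token_bits, len(packed))             # 320 40
print(raw_bits // token_bits)              # 4915
```

At 40 bytes, the packed tokens are only slightly larger than a 32-byte (256-bit) hash, which is the comparison Buterin draws.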

The development could have significant implications for digital image storage of profile pictures (PFPs) and non-fungible tokens (NFTs).

TiTok image compression

Developed by researchers from ByteDance and the Technical University of Munich, TiTok compresses an image into 32 small pieces of data (tokens) while keeping reconstruction quality competitive with existing methods.

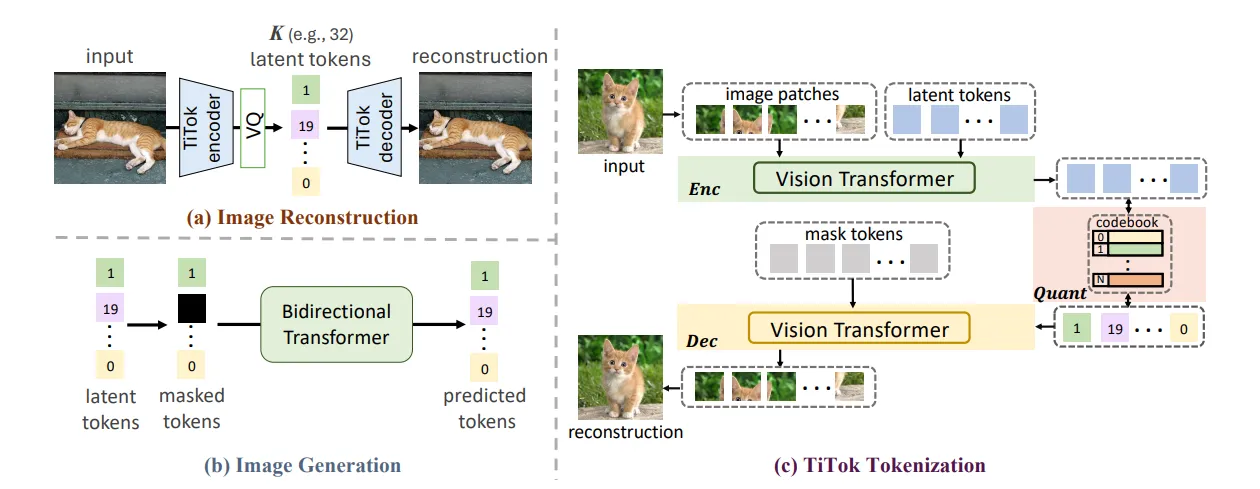

According to the TiTok research paper, advanced artificial intelligence (AI) image compression enables TiTok to compress a 256x256 pixel image into “32 discrete tokens.”

TiTok is a 1-dimensional (1D) image tokenization framework that “breaks grid constraints existing in 2D tokenization methods,” leading to more flexible and compact image representations.

“As a result, it leads to a substantial speed-up on the sampling process (e.g., 410× faster than DiT-XL/2) while obtaining a competitive generation quality,” the paper states.
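The token savings can be illustrated with a rough count. A conventional 2D tokenizer emits one token per grid cell, so its token count grows with resolution. The sketch below assumes a downsampling factor of 16, a common choice for grid-based tokenizers but not a figure given in this article, and the function name is hypothetical:

```python
def grid_token_count(height, width, downsample=16):
    """Tokens produced by a 2D grid tokenizer: one per downsampled cell."""
    return (height // downsample) * (width // downsample)

TITOK_TOKENS = 32  # figure quoted from the TiTok paper for 256x256 images

grid_tokens = grid_token_count(256, 256)
print(grid_tokens)                   # 256
print(grid_tokens / TITOK_TOKENS)    # 8.0
```

Under that assumption, a 256x256 image needs 256 grid tokens, eight times as many as TiTok's 32, which is one reason a generator working on the shorter sequence can sample faster.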

Machine learning imagery

TiTok uses transformer-based machine learning models to convert images into tokenized representations.
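The step from continuous model outputs to discrete tokens can be sketched with generic vector quantization, in which each latent vector is replaced by the index of its nearest entry in a codebook. The example below is a toy illustration of that general technique, not TiTok's actual code; the codebook, latent values and function names are all made up for demonstration:

```python
def quantize(latents, codebook):
    """Map each latent vector to the index of its nearest codebook entry."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [
        min(range(len(codebook)), key=lambda i: sq_dist(vec, codebook[i]))
        for vec in latents
    ]

def dequantize(tokens, codebook):
    """Look up the codebook vector for each token index."""
    return [codebook[t] for t in tokens]

# Toy 4-entry codebook of 2D vectors (hypothetical values).
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
# Toy latent vectors standing in for an encoder's output.
latents = [(0.1, 0.2), (0.9, 0.8), (0.2, 0.95)]

tokens = quantize(latents, codebook)
print(tokens)  # [0, 3, 2]
```

Only the integer indices need to be stored; a decoder that holds the same codebook can look the vectors back up before reconstructing the image.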

The method exploits region redundancy, meaning it identifies information that is repeated across different regions of the image and uses it to reduce the size of the encoded output.

“Recent advancements in generative models have highlighted the crucial role of image tokenization in the efficient synthesis of high-resolution images,” the researchers wrote.

According to the research paper, TiTok’s “compact latent representation” can yield “substantially more efficient and effective representations than conventional techniques.”